One of the biggest applications of web scraping is collecting restaurant listings from various sites. This could be to monitor prices, create an aggregator, or provide a better UX on top of existing restaurant listing websites.

Using BeautifulSoup. BeautifulSoup is widely used due to its simple API and its powerful extraction capabilities. It has many different parser options that allow it to understand even the most poorly written HTML pages – and the default one works great.

Here is a simple script that does that. We will use BeautifulSoup to extract the information, and we will retrieve restaurant information from Zomato.

To start with, this is the boilerplate code we need to get the Zomato search results page and set up BeautifulSoup to help us use CSS selectors to query the page for meaningful data.

We are also passing User-Agent headers to simulate a browser call so we don't get blocked.
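A minimal sketch of that boilerplate might look like the following. The URL is only a placeholder, the User-Agent string is an example value, and `fetch_soup` is a hypothetical helper name; none of them come from the original script. The 10-second timeout is also an added assumption.

```python
import requests
from bs4 import BeautifulSoup

# Placeholder: replace with the Zomato search results URL you want to scrape.
SEARCH_URL = "https://www.zomato.com/"

# Example User-Agent value; copying the one from your own browser also works.
HEADERS = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
        "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36"
    )
}


def fetch_soup(url, headers=HEADERS):
    """Download a page and return it parsed, ready for CSS selector queries."""
    response = requests.get(url, headers=headers, timeout=10)
    response.raise_for_status()  # stop early on 4xx/5xx responses
    return BeautifulSoup(response.text, "html.parser")


# Example call (performs a real network request):
# soup = fetch_soup(SEARCH_URL)
# print(soup.title)
```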

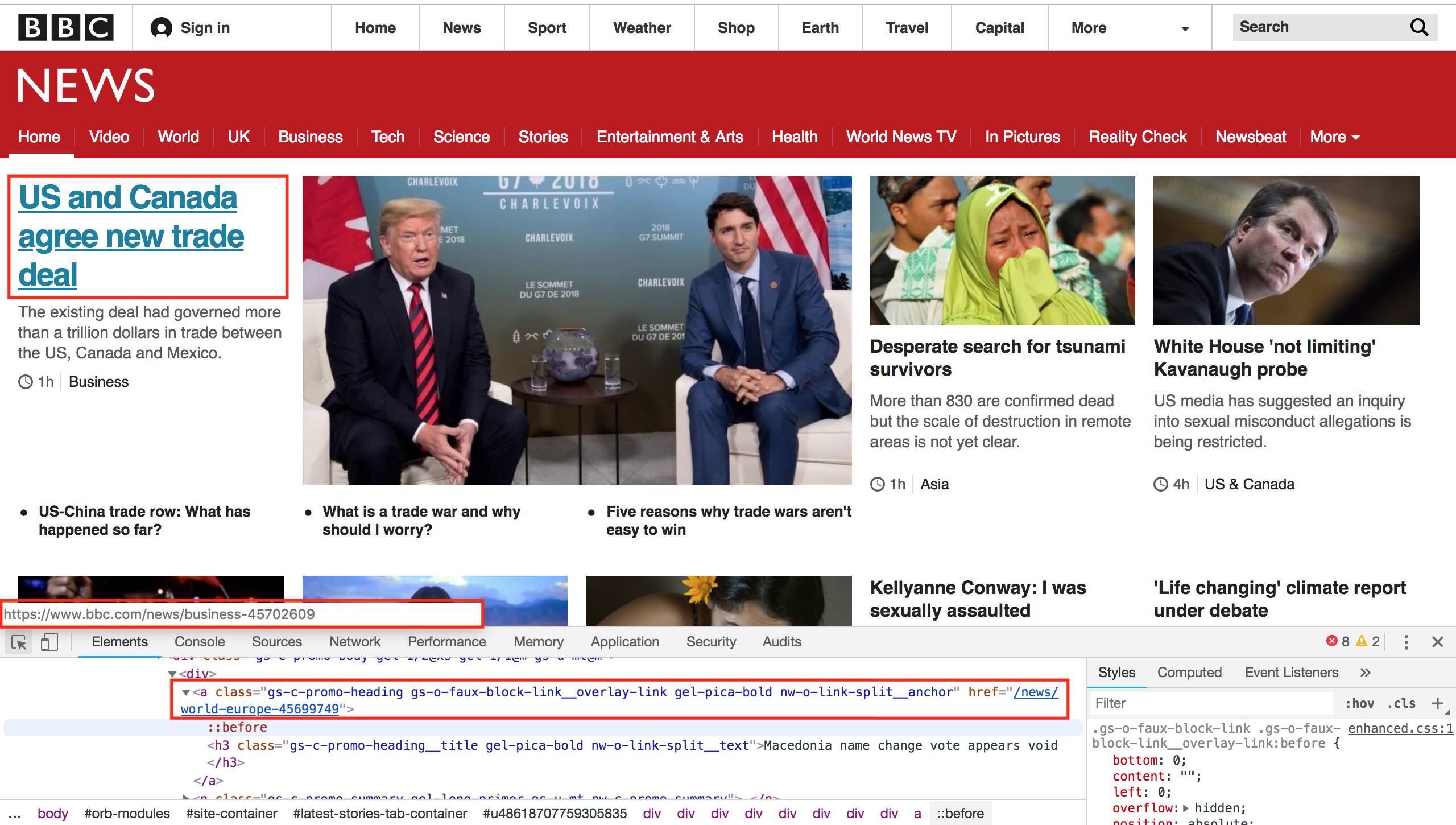

Now let's analyze the Zomato search results for a destination we want. This is how it looks.

And when we inspect the page, we find that each item's HTML is encapsulated in a tag with the class search-result.

We can use this to break the HTML document into parts, each containing one item's information, like this.
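As a rough illustration, the snippet below runs the same kind of query against a made-up HTML fragment instead of the live page. Only the search-result class comes from the text above; the sample markup is an assumption.

```python
from bs4 import BeautifulSoup

# Made-up sample markup standing in for the downloaded search results page.
SAMPLE_HTML = """
<div class="search-result"><p>First restaurant card</p></div>
<div class="search-result"><p>Second restaurant card</p></div>
<div class="footer"><p>Not a card</p></div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

# Each element with the class search-result is one restaurant card.
cards = soup.select(".search-result")
for card in cards:
    print(card)
    print("-" * 40)
```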

And when you run it...

You can tell that the code is isolating each card's HTML.

On further inspection, you can see that the name of the restaurant always has the class result-title. So let's try and retrieve that.
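A small sketch of that lookup, again on made-up sample markup rather than the live page, could be:

```python
from bs4 import BeautifulSoup

# Made-up sample markup; only the two class names come from the page inspection.
SAMPLE_HTML = """
<div class="search-result"><a class="result-title"> Pizza Place </a></div>
<div class="search-result"><a class="result-title">Burger Barn</a></div>
<div class="search-result"><p>Card without a title</p></div>
"""

soup = BeautifulSoup(SAMPLE_HTML, "html.parser")

names = []
for card in soup.select(".search-result"):
    title = card.select_one(".result-title")
    # Skip cards that lack a title instead of crashing on None.
    if title is not None:
        names.append(title.get_text(strip=True))

print(names)
```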

That will get us the names...

Bingo!

Now let's get the other data pieces...

When run, this produces all the info we need, including ratings, reviews, price, and address.
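One way to collect several fields per card is sketched below. Apart from search-result and result-title, every class name in it is hypothetical and chosen only for illustration, so inspect the live page to find the real ones. The helper names are assumptions too.

```python
from bs4 import BeautifulSoup

# Hypothetical selectors (except .result-title); check the real page markup.
FIELD_SELECTORS = {
    "name": ".result-title",
    "rating": ".rating",
    "reviews": ".review-count",
    "price": ".price",
    "address": ".address",
}

# Made-up sample markup standing in for the search results page.
SAMPLE_HTML = """
<div class="search-result">
  <a class="result-title">Pizza Place</a>
  <span class="rating">4.2</span>
  <span class="review-count">310 votes</span>
  <span class="price">500</span>
  <div class="address">12 Main Street</div>
</div>
<div class="search-result">
  <a class="result-title">Burger Barn</a>
  <span class="rating">3.9</span>
</div>
"""


def text_or_none(card, selector):
    """Return the stripped text of the first match, or None if absent."""
    element = card.select_one(selector)
    return element.get_text(strip=True) if element is not None else None


def parse_card(card):
    """Build one dict per card, with None for any field that is missing."""
    return {name: text_or_none(card, sel) for name, sel in FIELD_SELECTORS.items()}


soup = BeautifulSoup(SAMPLE_HTML, "html.parser")
restaurants = [parse_card(card) for card in soup.select(".search-result")]
for restaurant in restaurants:
    print(restaurant)
```

Returning None for missing fields keeps one incomplete card from stopping the whole run.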

In more advanced implementations, you will even need to rotate the User-Agent string so Zomato can't tell it's the same browser!
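A simple way to rotate the User-Agent is to pick one at random for each request. The sketch below assumes a small hand-written pool of example strings and a hypothetical `random_headers` helper.

```python
import random

# A small pool of example User-Agent strings; extend it with current browser values.
USER_AGENTS = [
    "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 "
    "(KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36",
    "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/605.1.15 "
    "(KHTML, like Gecko) Version/17.0 Safari/605.1.15",
    "Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0",
]


def random_headers():
    """Build request headers with a randomly chosen User-Agent."""
    return {"User-Agent": random.choice(USER_AGENTS)}


# Pass a fresh set of headers to each request, for example:
# requests.get(url, headers=random_headers())
print(random_headers())
```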

Once you get a little more advanced, you will realize that Zomato can simply block your IP, ignoring all your other tricks. This is a bummer, and it is where most web crawling projects fail.

Overcoming IP Blocks

Investing in a private rotating proxy service like Proxies API can often make the difference between a headache-free web scraping project that gets the job done consistently and one that never really works.

Plus, with our running offer of 1000 free API calls, you have almost nothing to lose by using our rotating proxy and comparing notes. It only takes one line of integration, so it's hardly disruptive.

Our rotating proxy server, Proxies API, provides a simple API that can solve all IP blocking problems instantly, with:

- Millions of high-speed rotating proxies located all over the world

- Automatic IP rotation

- Automatic User-Agent string rotation (which simulates requests from different, valid web browsers and web browser versions)

- Automatic CAPTCHA solving technology

Hundreds of our customers have successfully solved the headache of IP blocks with a simple API.

The whole thing can be accessed by a simple API like below in any programming language.

We have a running offer of 1000 API calls completely free. Register and get your free API Key here.

In this segment you are going to learn how to make a Python command line program that scrapes a website for all its links and saves those links to a text file for later processing. The program covers several topics: making HTTP requests, parsing HTML, using command line arguments, and file input and output. I’m using Python version 3.6.2 with the BeautifulSoup HTML parsing library and the Requests HTTP library. If you don’t have either, install them in your environment with pip install requests beautifulsoup4. So let’s get started.

Now let’s begin writing our script. First let’s import all the modules we will need:

Line 1 is the path to my virtual environment’s Python interpreter. On line 2 we import the sys module so we can access system-specific parameters, such as the command line arguments passed to the script. On line 3 we import the Requests library for making HTTP requests and the BeautifulSoup library for parsing HTML. Now let’s move on to the code.
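A header along these lines would match that description. The interpreter path is an assumption: the author's virtual environment path is not shown, so a generic `env` lookup stands in for it.

```python
#!/usr/bin/env python3
import sys            # command line arguments via sys.argv
import requests, bs4  # HTTP requests and HTML parsing (BeautifulSoup)
```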

Here we check sys.argv, a list that contains the arguments passed to the program. The first element of the list (argv[0]) is the name of the program, and anything after it is an argument. The program requires a URL (argv[1]) and a filename (argv[2]). If these arguments are not supplied, the script displays a usage statement. Now let’s move inside the if block and begin coding the script:
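The argument check could be sketched as follows. The `parse_args` helper and the script name in the usage message are hypothetical; the original performs the check inline in an if block.

```python
import sys

# The script name shown here is a hypothetical example.
USAGE = "Usage: python link_scraper.py <url> <file_name>"


def parse_args(argv):
    """Return (url, file_name) when exactly two arguments were given, else None."""
    if len(argv) == 3:
        return argv[1], argv[2]
    return None


args = parse_args(sys.argv)
if args is None:
    print(USAGE)
else:
    url, file_name = args  # named variables read better than argv indexes
    print(f"url={url} file_name={file_name}")
```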

First, we simply store the command line arguments in the url and file_name variables for readability. Let’s move on to making the HTTP request.

Before making the request, we print a message so the user knows the program is working.

Next, we use the Requests library to make an HTTP GET request with requests.get(url) and store the result in the response variable.

Then we call the .raise_for_status() method, which raises an HTTPError if the HTTP request returned an unsuccessful status code.
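The request step might be sketched like this. The `download` helper name and the 10-second timeout are assumptions added for illustration. The second half builds a response object by hand so the behaviour of raise_for_status() can be seen without touching the network.

```python
import requests


def download(url):
    """Fetch a page, raising requests.HTTPError on a 4xx or 5xx status."""
    print(f"Downloading {url} ...")  # let the user know work has started
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    return response


# Demonstrate raise_for_status() on a hand-built response (no network needed).
fake = requests.Response()
fake.status_code = 404
try:
    fake.raise_for_status()
except requests.HTTPError as error:
    print("Request failed:", error)
```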

Now we call bs4.BeautifulSoup() and store the result in the soup variable. The first argument is the response text, which we get from response.text on our response object. The second argument is the string ‘html.parser’, which tells BeautifulSoup to use Python’s built-in HTML parser.

Then we call the soup object’s .find_all() method to find all the HTML a tags and store them in the links list.
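To see these two calls in isolation, the sketch below parses a made-up HTML string instead of a downloaded page:

```python
import bs4

# Made-up sample page standing in for response.text.
SAMPLE_HTML = """
<html><body>
  <a href="https://example.com/one">One</a>
  <p>No link here</p>
  <a href="/two">Two</a>
</body></html>
"""

soup = bs4.BeautifulSoup(SAMPLE_HTML, "html.parser")
links = soup.find_all("a")  # every a tag in document order
print(len(links), "links found")
```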

To save the links, we first open a file in binary mode for writing (‘wb’) and store it in the file variable.

Then we give the user feedback by printing a message.

Next we iterate through the links list, which contains the links we grabbed using soup.find_all(‘a’), storing each link object in the link variable.

Inside the loop, we get the a tag’s href attribute by calling the .get() method on the link object, append a newline (\n) so each link is on its own line, and store the result in the href variable.

We then write the link to the file. Notice that we’re calling .encode() on the href variable: remember that we opened the file for writing in binary mode, so we must encode the string as a bytes-like object; otherwise you will get a TypeError.
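The effect of binary mode can be checked with a short experiment. The sketch below writes to a temporary directory, and the href values are stand-ins rather than scraped data.

```python
import os
import tempfile

hrefs = ["https://example.com/one", "/two"]  # stand-ins for scraped href values

path = os.path.join(tempfile.mkdtemp(), "links.txt")
file = open(path, "wb")  # binary mode: write() accepts bytes only
for href in hrefs:
    line = href + "\n"         # one link per line
    file.write(line.encode())  # str -> bytes (UTF-8 by default)
file.close()

# Writing a plain str to a binary-mode file raises TypeError.
with open(path, "ab") as handle:
    try:
        handle.write("not bytes")
    except TypeError as error:
        print("TypeError:", error)

with open(path, "rb") as handle:
    print(handle.read())
```

Opening the file in text mode (‘w’) would avoid the need for .encode() altogether; binary mode is kept here because it is what the walkthrough uses.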

Finally, we close the file with the .close() method and print a message letting the user know the processing is done. Now let’s look at the completed program and run it.
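Put together, the program might look roughly like the sketch below. It is a reconstruction from the steps described above, not the author's exact listing: the function names, messages, and timeout are assumptions, a with block replaces the explicit .close() call, and a tags without an href are skipped so that adding the newline never fails on None.

```python
#!/usr/bin/env python3
import sys
import requests, bs4


def extract_hrefs(html):
    """Return the href of every a tag that has one."""
    soup = bs4.BeautifulSoup(html, "html.parser")
    # href=True skips anchors without an href, which would otherwise yield None.
    return [link.get("href") for link in soup.find_all("a", href=True)]


def main(argv):
    if len(argv) != 3:
        print(f"Usage: {argv[0]} <url> <file_name>")
        return 1
    url, file_name = argv[1], argv[2]

    print(f"Grabbing the page at {url} ...")
    response = requests.get(url, timeout=10)
    response.raise_for_status()

    hrefs = extract_hrefs(response.text)
    print(f"Saving {len(hrefs)} links to {file_name} ...")
    with open(file_name, "wb") as file:
        for href in hrefs:
            file.write((href + "\n").encode())
    print("Done.")
    return 0


if __name__ == "__main__":
    main(sys.argv)
```

Saved under a name of your choice, the script takes the URL as its first argument and the output file name as its second.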

Now all you have to do is type this into the command line:

Output:

Now all you have to do is open up the links file in an editor to verify they were indeed written.

And that’s all there is to it. You have now successfully written a web scraper that saves links to a file on your computer. You can take this concept and easily expand it for all sorts of web data processing.

Further reading: Requests, BeautifulSoup, File I/O